- GRC Engineer - Engineering the Future of GRC


⚙️ Compliance-as-Cope: How GRC Engineering Automated the Wrong Thing

As an industry, we leveraged APIs and scripting to spark what became a GRC revolution. But we followed the path of least resistance. Here's why GRC Engineering risks becoming shelfware.

📄 We Built Something Impressive

GRC Engineering took off when cloud APIs made evidence collection programmable. We built around what was already commoditised: AWS SDKs, GitHub Actions, GitLab CI, Kubernetes logging APIs.

Control tests ran continuously. Evidence flowed into platforms automatically. Compliance dashboards updated in real-time. Audit prep went from weeks to hours.

The engineering was real. The automation was real.

But what exactly did we automate?

You wouldn't write API documentation before writing the API.

You wouldn't document a function before implementing it. Yet in GRC, we routinely map to compliance frameworks before understanding what controls we actually have.

We've inverted the natural order, and it's costing us clarity, speed, and truth.

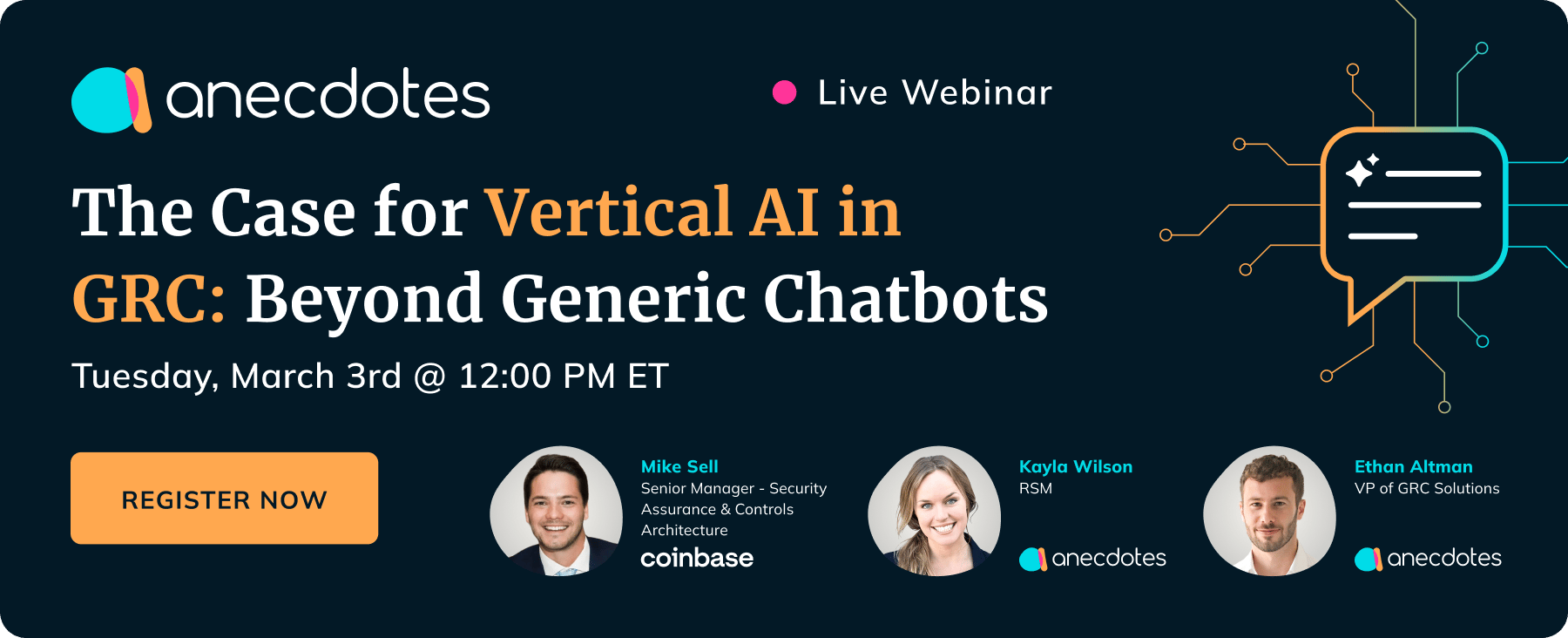


What We Actually Commoditised

The pipeline from evidence collection → control testing → audit prep. The predictable part.

AWS APIs → Automated security group validation

Kubernetes logs → Continuous RBAC auditing

GitHub audit trails → Automated code review tracking

Cloud infrastructure → Real-time compliance monitoring

This is the part where tooling already exists, APIs are documented, and the problem space is well-understood. You can hire engineers to build it. You can buy platforms that do it out of the box.
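The "AWS APIs → automated security group validation" line shows why this layer was tractable: the input has a documented shape and the pass/fail rule is crisp. A minimal sketch, assuming a response shaped like the EC2 `DescribeSecurityGroups` output (for example from boto3's `ec2.describe_security_groups()`); the function name and the "no SSH open to the internet" rule are illustrative choices, not a standard control definition.

```python
from typing import Any


def open_ssh_findings(response: dict[str, Any]) -> list[str]:
    """Return IDs of security groups allowing SSH (TCP 22) from anywhere.

    `response` is assumed to follow the EC2 DescribeSecurityGroups shape.
    """
    findings = []
    for group in response.get("SecurityGroups", []):
        for perm in group.get("IpPermissions", []):
            protocol = perm.get("IpProtocol")
            if protocol == "-1":
                covers_22 = True  # "-1" means all protocols and all ports
            elif protocol in ("tcp", "6"):
                covers_22 = perm.get("FromPort", 0) <= 22 <= perm.get("ToPort", 65535)
            else:
                covers_22 = False
            open_to_world = any(
                r.get("CidrIp") == "0.0.0.0/0" for r in perm.get("IpRanges", [])
            ) or any(
                r.get("CidrIpv6") == "::/0" for r in perm.get("Ipv6Ranges", [])
            )
            if covers_22 and open_to_world:
                findings.append(group["GroupId"])
                break  # one finding per group is enough
    return findings


sample = {
    "SecurityGroups": [
        {"GroupId": "sg-aaa", "IpPermissions": [
            {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
             "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}]},
        {"GroupId": "sg-bbb", "IpPermissions": [
            {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
             "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}]},
    ]
}
print(open_ssh_findings(sample))  # ['sg-aaa']
```

Every input and output here is well-defined, which is exactly what makes this kind of check cheap to build and easy to buy.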

Meanwhile, the questions that actually drive business decisions stayed untouched:

Where should we spend our security budget?

What's our real exposure to third-party risk?

Which controls have actual leverage versus compliance theatre?

How do we model the business impact of security incidents?

These were still answered with manual spreadsheets, gut feeling, and colourful matrices.

Why?

POV: You asked ChatGPT about GRC Engineering and started “building”

The Structural Reason

Evidence collection has clear interfaces. Risk quantification doesn't.

You can grep AWS logs. You can't grep "what's our actual third-party risk exposure."

Think of it like building a CI/CD pipeline for a codebase that doesn't exist. You've automated the tests, the deployments, the monitoring, but you never wrote the application. The pipeline is impressive engineering. It runs perfectly. It just doesn't produce software.

That's what GRC engineering looks like right now. The evidence pipeline runs beautifully. The risk quantification layer was never built.

Risk quantification requires:

Probabilistic modelling (most GRC engineers don't know statistics)

Business context (security teams often lack business visibility)

Cross-functional coordination (risk spans organisational boundaries)

Ambiguity tolerance (risk has irreducible uncertainty)

Evidence collection requires:

API documentation

Data pipeline engineering

Dashboard building

One is well-defined and implementable. The other is hard, ambiguous, and politically uncomfortable.

So we built what was tractable. And because most GRC programmes start from compliance requirements (SOC 2, ISO 27001, customer questionnaires), the engineering naturally followed that path. We automated what compliance frameworks asked for: evidence. Not what the business needed: risk decisions.

And then something subtle happened.

We mistook the automation for maturity.

I call that...

Compliance-as-cope.

What Real Risk Engineering Looks Like

If we started from risk instead of compliance, GRC engineering would look radically different.

Instead of red/yellow/green matrices, you'd have probabilistic models:

```python
# Monte Carlo simulation for vendor breach exposure
# (illustrative pseudocode: monte_carlo() and its inputs are placeholders)
risk_exposure = monte_carlo(
    threat_scenarios=vendor_breaches,
    control_effectiveness=mfa_bypass_rate,
    business_impact=revenue_loss_distribution,
    iterations=10_000,
)

# Output: 90th percentile annual loss = £2.4M
# vs "High Risk" in a spreadsheet
```

This isn't theoretical. This is what actuaries do for insurance. What quants do for trading. What GRC engineering should do for security.
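To make the `monte_carlo(...)` pseudocode concrete, here is a minimal runnable sketch using only Python's standard library. It swaps in one common frequency/severity structure: a Poisson number of vendor breach attempts per year, each succeeding with a probability standing in for `mfa_bypass_rate`, and a lognormal loss per successful breach. The function names and every parameter value are hypothetical placeholders, not benchmarks, so the percentile it prints will not match the £2.4M figure.

```python
import math
import random
import statistics


def poisson(rng: random.Random, lam: float) -> int:
    """Sample a Poisson count with Knuth's method (fine for small rates)."""
    threshold, k, p = math.exp(-lam), 0, rng.random()
    while p > threshold:
        k += 1
        p *= rng.random()
    return k


def simulate_vendor_breach_losses(
    attempts_per_year: float,  # hypothetical mean vendor breach attempts per year
    mfa_bypass_rate: float,    # hypothetical P(an attempt gets past MFA)
    median_loss: float,        # hypothetical median loss per breach, GBP
    loss_sigma: float,         # hypothetical lognormal spread of that loss
    iterations: int = 10_000,
    seed: int = 7,
) -> list[float]:
    """Return one simulated total annual loss per iteration."""
    rng = random.Random(seed)
    annual_losses = []
    for _ in range(iterations):
        attempts = poisson(rng, attempts_per_year)
        # Each attempt independently succeeds with probability mfa_bypass_rate.
        breaches = sum(rng.random() < mfa_bypass_rate for _ in range(attempts))
        # lognormvariate(mu, sigma): mu = log(median) for a lognormal loss.
        annual_losses.append(sum(
            rng.lognormvariate(math.log(median_loss), loss_sigma)
            for _ in range(breaches)
        ))
    return annual_losses


losses = simulate_vendor_breach_losses(
    attempts_per_year=4.0, mfa_bypass_rate=0.1,
    median_loss=500_000, loss_sigma=1.0,
)
p90 = statistics.quantiles(losses, n=10)[-1]  # 90th percentile cut point
share_with_loss = sum(x > 0 for x in losses) / len(losses)
print(f"90th percentile annual loss: GBP {p90:,.0f}")
print(f"Share of simulated years with any loss: {share_with_loss:.1%}")
```

Rerunning with a lower `mfa_bypass_rate` shows how a stronger control shifts the whole distribution, not just a colour.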

And people are building this.

Tony Martin-Vegue has been the loudest voice in this space for years — his newsletter is one of the best resources for understanding cyber risk quantification from a practitioner's perspective, not just academic theory.

His upcoming book, Heatmaps to Histograms, is named for exactly this transition: moving from the colourful matrices we've normalised to the statistical models we actually need.

The fact that this book needs to exist tells you how far behind the industry is. If you're serious about risk quantification, start there.

Beyond probabilistic modelling, real risk engineering would include:

Control Leverage Analysis:

Statistical analysis of which controls actually reduce incidents

Cost-benefit modelling for security investments

Automated detection of redundant or low-impact controls

Business Impact Modelling:

Automated business process dependency mapping

Revenue impact scenarios for different attack vectors

Regulatory fine probability and cost modelling
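The cost-benefit item under Control Leverage Analysis can start simply. One common formulation, often called return on security investment (ROSI), compares the reduction in annualised loss expectancy (ALE) a control buys against what the control costs. A minimal sketch; the control names and every figure below are hypothetical, and in practice the ALE inputs would come from a simulation rather than single point estimates.

```python
from dataclasses import dataclass


@dataclass
class Control:
    name: str
    annual_cost: float  # GBP per year to run the control
    ale_before: float   # annualised loss expectancy without the control
    ale_after: float    # annualised loss expectancy with the control

    @property
    def risk_reduction(self) -> float:
        return self.ale_before - self.ale_after

    @property
    def rosi(self) -> float:
        # ROSI = (loss reduction - cost) / cost
        return (self.risk_reduction - self.annual_cost) / self.annual_cost


controls = [  # hypothetical controls and figures, for illustration only
    Control("phishing-resistant MFA", 120_000, 900_000, 300_000),
    Control("quarterly access review", 60_000, 200_000, 170_000),
]

# Rank by leverage: highest return per pound spent first.
for c in sorted(controls, key=lambda c: c.rosi, reverse=True):
    print(f"{c.name:28} ROSI {c.rosi:+.0%}")
# MFA ranks first (+400%); the access review comes out at -50%.
```

A negative ROSI doesn't automatically mean "remove the control" (it may be contractually required), but it does make the compliance-versus-leverage question explicit.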

Notice the difference? This is engineering that reduces risk. Not engineering that generates compliance artefacts faster.

But if it's so clearly better, why hasn't anyone built it at scale?

The Effort Mismatch

Quantify the gap.

Evidence collection automation for SOC 2:

3 months engineering time

Saves 40 hours/year of manual audit prep

ROI: Clear, measurable, easy to justify to leadership

Risk quantification for security budget allocation:

Never attempted at most organisations

Would inform £2M+ annual security spend

ROI: Massive, but harder to scope and pitch

We invested 3 months of engineering into saving 40 hours a year. We invested zero into informing how millions get allocated.
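Put in hours, the evidence-automation side of that comparison is easy to check. A back-of-the-envelope sketch, assuming about 160 working hours per engineer-month (an assumption, not a figure from the text) and a hypothetical upkeep parameter for pipeline maintenance: 3 months is roughly 480 hours, so saving 40 hours a year takes about 12 years to pay back.

```python
import math

HOURS_PER_ENGINEER_MONTH = 160  # assumption: one engineer at ~40 hours/week


def payback_years(build_months: float, hours_saved_per_year: float,
                  upkeep_hours_per_year: float = 0.0) -> float:
    """Years until saved hours repay the build effort (inf if never)."""
    net_saving = hours_saved_per_year - upkeep_hours_per_year
    if net_saving <= 0:
        return math.inf
    return build_months * HOURS_PER_ENGINEER_MONTH / net_saving


print(payback_years(3, 40))      # 12.0
print(payback_years(3, 40, 20))  # 24.0 with a hypothetical 20 h/year of upkeep
```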

The evidence automation ROI fits on a slide. The risk quantification ROI requires explaining probability distributions to a CFO. One gets funded. The other gets deprioritised.

We optimised the wrong thing. Not because we're bad engineers, but because the incentive structure rewards tractable problems over important ones.

If GRC programmes started from risk instead of compliance, everything downstream would change. Job descriptions would say "risk quantification engineer" instead of "GRC automation engineer."

Tool categories would shift from evidence collection platforms to risk modelling platforms. Success metrics would measure reduced risk exposure, not automated evidence percentage. Hiring profiles would prioritise statistics and modelling skills over API integration skills.

The entire industry would look different.

The Path Forward

Evidence collection automation isn't wrong; it's just insufficient. Automate evidence when you have a functioning security programme, when audit prep is genuinely a bottleneck, and when you've already invested in risk quantification. But if you don't know where to spend your security budget, evidence automation is premature optimisation of the wrong problem.

Here's what actually matters:

Acknowledge that evidence automation ≠ risk management. Audit hygiene is necessary, but it isn't programme maturity. Stop confusing the two.

Invest in risk quantification. Start with Monte Carlo simulations for your top 5 risk scenarios. Read Tony Martin-Vegue's work. Move from heatmaps to histograms — literally.

Measure control effectiveness, not just control existence. Which controls actually prevented incidents last year? If you can't answer that, your evidence pipeline is measuring the wrong thing.

Question your starting point. If your GRC roadmap is driven by compliance frameworks, you're probably building cope. If it's driven by quantified risk exposure, you're probably building something valuable.

Hire for the hard problem. Risk quantification needs statistics and modelling skills, not just API integration skills. The talent profile is different.
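The "top 5 risk scenarios" and "heatmaps to histograms" advice can be sketched end to end: simulate each scenario's annual loss, sum them per simulated year, then print a text histogram and a couple of loss-exceedance probabilities instead of a red/amber/green cell. The scenario names and their (frequency, median loss, spread) parameters are hypothetical placeholders; the Poisson-frequency, lognormal-severity structure is one common modelling choice, not the only one.

```python
import math
import random

# Hypothetical top-5 scenarios: (mean events/year, median loss per event in GBP, lognormal sigma)
SCENARIOS = {
    "vendor breach": (0.4, 500_000, 1.0),
    "ransomware": (0.1, 2_000_000, 0.8),
    "cloud misconfiguration": (0.8, 100_000, 1.2),
    "insider data theft": (0.2, 300_000, 1.0),
    "payment fraud": (1.5, 40_000, 0.9),
}


def poisson(rng: random.Random, lam: float) -> int:
    """Sample a Poisson count with Knuth's method (fine for small rates)."""
    threshold, k, p = math.exp(-lam), 0, rng.random()
    while p > threshold:
        k += 1
        p *= rng.random()
    return k


def simulate_portfolio(iterations: int = 10_000, seed: int = 11) -> list[float]:
    """Total annual loss across all scenarios, one value per simulated year."""
    rng = random.Random(seed)
    totals = []
    for _ in range(iterations):
        total = 0.0
        for rate, median, sigma in SCENARIOS.values():
            for _ in range(poisson(rng, rate)):
                total += rng.lognormvariate(math.log(median), sigma)
        totals.append(total)
    return totals


def exceedance(losses: list[float], threshold: float) -> float:
    """Share of simulated years whose total loss exceeds `threshold`."""
    return sum(x > threshold for x in losses) / len(losses)


losses = simulate_portfolio()

# Text histogram of total annual loss, binned in GBP millions.
edges = [0, 100_000, 500_000, 1_000_000, 2_500_000, 5_000_000, math.inf]
for lo, hi in zip(edges, edges[1:]):
    count = sum(lo <= x < hi for x in losses)
    label = f"{lo / 1e6:.1f}M+" if hi == math.inf else f"{lo / 1e6:.1f}-{hi / 1e6:.1f}M"
    print(f"{label:>10} {'#' * (50 * count // len(losses)):<50} {count}")

# Loss exceedance probabilities at a few thresholds.
for threshold in (1_000_000, 5_000_000):
    print(f"P(annual loss > GBP {threshold / 1e6:.0f}M) = {exceedance(losses, threshold):.1%}")
```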

Real GRC engineering maturity: when your risk models are as automated as your evidence collection.

Until then, you're not engineering risk. You're engineering sales enablement.


That’s all for this week’s issue, folks!

If you enjoyed it, you might also enjoy:

My spicier takes on LinkedIn [/in/ayoubfandi]

Listening to the GRC Engineer Podcast

See you next week!

