⚙️ Engineer Your GRC Process Before You Automate It

Most GRC teams automate broken workflows and wonder why outputs stay broken. GRC Engineering starts with process design, not tools.


📣 Engineer Your Process Before You Automate It

Last week: Fixed your AI prompts.

This week: Audit your workflows before automation touches them.

Most GRC teams waste months building automation on top of broken processes. They automate chaos, then wonder why outputs are still rubbish.

The process audit comes first. The automation comes second.

Let's fix this.

The 30-Minute Process Audit Before Automation

Recently, I watched a team spend two weeks vibe-coding an evidence validation system. Beautiful architecture. Clean code. Completely useless.

Why? Their underlying workflow couldn't answer:

"What makes evidence audit-ready?"

They had:

3 different people using 3 different quality standards

No documentation of what "complete" meant internally vs. for external certs

Decision criteria that lived only in people's heads and tribal knowledge

Outputs that varied wildly depending on who did the work

So their automation learned to replicate inconsistency at scale and to repeat the habits of whoever vibe-coded the app.

The system worked perfectly. The workflow was broken.

Here's the audit that would have caught this in 30 minutes.

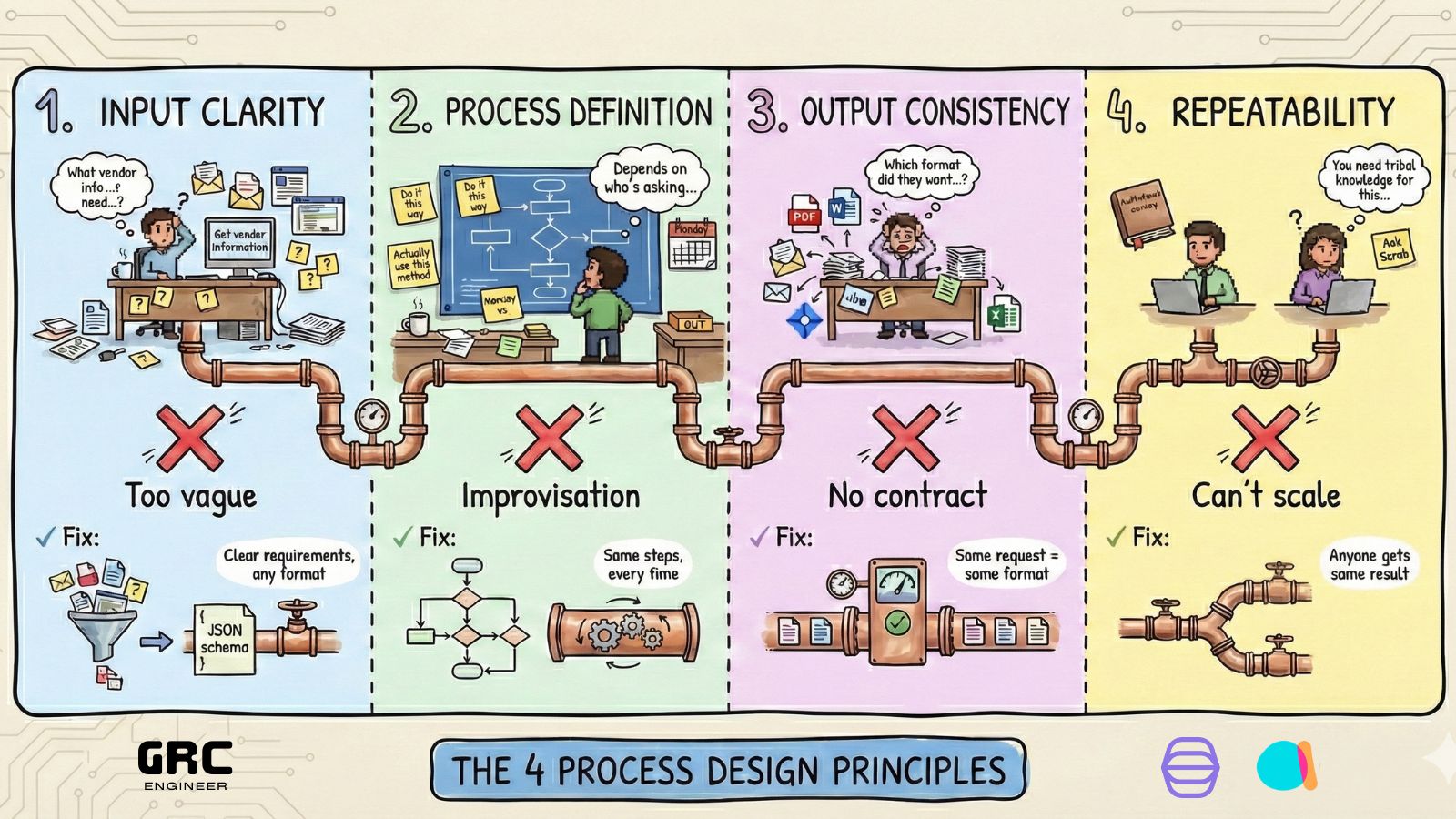

The 4 Process Design Principles

The foundation: What every GRC workflow needs before automation touches it

Before automating anything - whether it's AI, scripts, or platform workflows - your process needs these four qualities:

1. Input Quality: Do you have consistent data definitions?

Can you define the exact data format and structure for every input?

Bad: "We need information about the vendor"

Good?

    {
      "vendor_name": {
        "type": "string",
        "required": true
      },
      "primary_contact": {
        "type": "string",
        "format": "email"
      },
      "services_provided": {
        "type": "string",
        "enum": ["SaaS", "IaaS", "PaaS"]
      },
      "data_classification": {
        "type": "string",
        "enum": ["Public", "Internal", "Confidential", "Restricted"]
      },
      "contract_end_date": {
        "type": "string",
        "format": "date"
      }
    }

This is your basic data layer. If your inputs aren't structured and consistent, your process starts with chaos.
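One way to enforce a definition like this is to check every intake record before it enters the workflow. The sketch below is a minimal illustration, not a full schema validator: it assumes the field names and enums from the example, `validate_vendor_input` is a hypothetical helper, and the email check is deliberately crude.

```python
from datetime import date

# Allowed values copied from the example definition.
SERVICES = {"SaaS", "IaaS", "PaaS"}
CLASSIFICATIONS = {"Public", "Internal", "Confidential", "Restricted"}


def validate_vendor_input(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record is usable."""
    errors = []

    # vendor_name is the only field marked required in the example.
    name = record.get("vendor_name")
    if not isinstance(name, str) or not name.strip():
        errors.append("vendor_name: required non-empty string")

    contact = record.get("primary_contact")
    if contact is not None:
        # Crude shape check (assumption): exactly one "@" with text on both sides.
        parts = contact.partition("@") if isinstance(contact, str) else ("", "", "")
        local, _, domain = parts
        if not local or not domain or "@" in domain:
            errors.append("primary_contact: not an email address")

    service = record.get("services_provided")
    if service is not None and (not isinstance(service, str) or service not in SERVICES):
        errors.append(f"services_provided: must be one of {sorted(SERVICES)}")

    level = record.get("data_classification")
    if level is not None and (not isinstance(level, str) or level not in CLASSIFICATIONS):
        errors.append(f"data_classification: must be one of {sorted(CLASSIFICATIONS)}")

    end_date = record.get("contract_end_date")
    if end_date is not None:
        try:
            date.fromisoformat(end_date)  # expects an ISO date such as 2026-01-31
        except (TypeError, ValueError):
            errors.append("contract_end_date: must be an ISO date (YYYY-MM-DD)")

    return errors
```

Returning every problem at once, rather than stopping at the first, lets whoever submitted the record fix it in one pass.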

2. Process Definition: Is your process always the same?

Can you document every step so it runs identically each time?

Bad: "We assess the risk based on experience and adjust as needed"

Good (sorry I’m using a qualitative example here):

Step 1: Score impact (1-5) using data classification matrix.

Step 2: Score likelihood (1-5) using threat intel checklist.

Step 3: Multiply for risk rating.

Step 4: If rating ≥15, escalate to security lead.

If your process changes based on mood, day of week, who’s on PTO, who’s asking or who's doing it, it's not a process - it's improvisation.
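Steps 3 and 4 above are simple enough to pin down in code, which is a quick test of whether the process is really defined. A minimal sketch, assuming the impact and likelihood scores have already been produced from the matrix and checklist in Steps 1 and 2; `rate_risk` and the returned field names are illustrative, not part of any tool.

```python
ESCALATION_THRESHOLD = 15  # from Step 4


def rate_risk(impact: int, likelihood: int) -> dict:
    """Validate the two 1-5 scores, multiply them, and decide on escalation."""
    for label, score in (("impact", impact), ("likelihood", likelihood)):
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(score, bool) or not isinstance(score, int) or not 1 <= score <= 5:
            raise ValueError(f"{label} must be an integer from 1 to 5, got {score!r}")

    rating = impact * likelihood  # Step 3
    return {
        "impact": impact,
        "likelihood": likelihood,
        "risk_rating": rating,
        "escalate_to_security_lead": rating >= ESCALATION_THRESHOLD,  # Step 4
    }
```

If a rule like "adjust as needed" cannot be written as a branch in a function like this, it is a sign the decision criteria still live in someone's head.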

3. Output Consistency: Do you always get what you request?

When you ask for a specific output format, do you get that format every time?

Bad: Sometimes you get a PDF, sometimes a Word doc, sometimes an email summary, sometimes a Jira ticket - depending on who's working on it

Good?

    {
      "vendor_name": {
        "type": "string"
      },
      "risk_rating": {
        "type": "integer",
        "minimum": 1,
        "maximum": 25
      },
      "findings": {
        "type": "array",
        "items": {
          "type": "string"
        }
      },
      "recommendations": {
        "type": "array",
        "items": {
          "type": "string"
        }
      },
      "review_date": {
        "type": "string",
        "format": "date"
      }
    }

Same request = same output format, same structure, every single time.

If your outputs vary when your requests don't, your workflow has no contract.
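A contract is only useful if something checks it. The sketch below walks an output record against the definition above using only the keywords that appear in it (`type`, `minimum`, `maximum`, `items`, `format: date`). It is a hand-rolled illustration rather than a real JSON Schema implementation, and it assumes every field is mandatory and that extra fields are errors, neither of which the example states.

```python
from datetime import date

# The example output definition, written as a Python dict.
OUTPUT_SCHEMA = {
    "vendor_name": {"type": "string"},
    "risk_rating": {"type": "integer", "minimum": 1, "maximum": 25},
    "findings": {"type": "array", "items": {"type": "string"}},
    "recommendations": {"type": "array", "items": {"type": "string"}},
    "review_date": {"type": "string", "format": "date"},
}

PY_TYPES = {"string": str, "integer": int, "array": list}


def check_value(value, rule: dict, path: str) -> list[str]:
    """Check one value against one rule; supports only the keywords used above."""
    expected = PY_TYPES[rule["type"]]
    if isinstance(value, bool) or not isinstance(value, expected):
        return [f"{path}: expected {rule['type']}"]
    errors = []
    if "minimum" in rule and value < rule["minimum"]:
        errors.append(f"{path}: below minimum {rule['minimum']}")
    if "maximum" in rule and value > rule["maximum"]:
        errors.append(f"{path}: above maximum {rule['maximum']}")
    if rule.get("format") == "date":
        try:
            date.fromisoformat(value)
        except ValueError:
            errors.append(f"{path}: not an ISO date")
    # Recurse into list elements when the rule defines an item type.
    for i, item in enumerate(value if "items" in rule else []):
        errors.extend(check_value(item, rule["items"], f"{path}[{i}]"))
    return errors


def check_output(record: dict, schema: dict = OUTPUT_SCHEMA) -> list[str]:
    """Assumption: every schema field is mandatory and unknown fields are rejected."""
    errors = [f"{key}: missing" for key in schema if key not in record]
    errors += [f"{key}: unexpected field" for key in record if key not in schema]
    for key, rule in schema.items():
        if key in record:
            errors.extend(check_value(record[key], rule, key))
    return errors
```

Run a check like this on every output, whether a person or a script produced it, and a PDF-one-day, Jira-ticket-the-next workflow fails immediately instead of drifting.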

4. Repeatability: Can anyone execute this with minimal context?

Could someone new pick up this process and get the same outcome?

Bad: "You need to understand our risk appetite and company culture to do this properly"

Good: "Follow steps 1-10 in the runbook. All decision criteria are documented. New analyst completed this in 90 minutes with 95% accuracy"

If your workflow requires tribal knowledge, institutional memory, or "you just need experience," it can't scale.

All things equal, two people following this process should arrive at the same outcome.

Without strong repeatability, you won't be ready for AI-powered workflows anyway.
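Repeatability can be measured rather than asserted: give two people the same cases and count how often they land on the same result. A minimal sketch, assuming numeric scores; `agreement_rate` is a hypothetical helper, and the tolerance and any pass threshold are choices to make per workflow.

```python
def agreement_rate(scores_a: list[int], scores_b: list[int], tolerance: int = 0) -> float:
    """Share of cases where two analysts' scores differ by at most `tolerance`."""
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("need two non-empty score lists of equal length")
    matches = sum(abs(a - b) <= tolerance for a, b in zip(scores_a, scores_b))
    return matches / len(scores_a)


# Made-up scores for five identical cases, rated independently.
analyst_a = [12, 15, 6, 20, 9]
analyst_b = [12, 16, 6, 20, 4]

exact = agreement_rate(analyst_a, analyst_b)               # identical scores only
close = agreement_rate(analyst_a, analyst_b, tolerance=1)  # within one point
```

A low rate points at the process, not the people: find the cases where the scores diverge and check which decision criterion was left open to interpretation.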


The 30-Minute Audit Template ⏰

Copy-paste: Run this on any GRC workflow

Do not use an LLM to run this; you'd defeat the purpose.

Pick one workflow, run the audit, and fix one thing in the worst-performing category.

That’s it.

# WORKFLOW AUDIT CHECKLIST

Workflow Name: _________________

Owner: _________________

Last Updated: _________________

## INPUT QUALITY

□ I can define exact data formats for all inputs

□ Input structure is documented (types, enums, formats)

□ Data validation rules exist and are enforced

□ Missing or malformed inputs are caught immediately

## PROCESS DEFINITION

□ I can document this process in ≤10 steps

□ Steps are identical regardless of who executes them

□ Decision points have specific, measurable criteria

□ Process has been followed successfully by 2+ people

## OUTPUT CONSISTENCY

□ Same request produces same output format every time

□ Output structure is defined (schema, template, format)

□ Output variations are intentional, not random

□ I can programmatically validate output structure

## REPEATABILITY

□ Same inputs produce same outputs (>90% match)

□ Process doesn't require tribal knowledge

□ New person can learn this in <2 hours

□ Process failures are documented and fixed

SCORE: ___/16

0-8: Fix workflow before automating

9-12: Workflow ready for basic automation

13-16: Strong foundation for advanced automation
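The boxes still need to be ticked by a person, but the tally is mechanical. The sketch below, a hypothetical `score_audit` helper, counts the ticks per category, maps the total to the three bands above, and names the weakest category so you know where to fix one thing first.

```python
CATEGORIES = (
    "INPUT QUALITY",
    "PROCESS DEFINITION",
    "OUTPUT CONSISTENCY",
    "REPEATABILITY",
)


def score_audit(checks: dict[str, list[bool]]) -> dict:
    """Tally ticked boxes per category and map the total to the score bands."""
    for category in CATEGORIES:
        if len(checks.get(category, [])) != 4:
            raise ValueError(f"{category}: expected exactly 4 checkbox answers")

    per_category = {c: sum(checks[c]) for c in CATEGORIES}
    total = sum(per_category.values())

    if total <= 8:
        verdict = "Fix workflow before automating"
    elif total <= 12:
        verdict = "Workflow ready for basic automation"
    else:
        verdict = "Strong foundation for advanced automation"

    # min() returns the first lowest-scoring category when there is a tie.
    weakest = min(CATEGORIES, key=per_category.get)
    return {
        "total": total,
        "verdict": verdict,
        "weakest": weakest,
        "per_category": per_category,
    }
```

Keeping the per-category counts alongside the total makes it easy to compare audits of the same workflow over time.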

How This Looks Across Different Workflows

The same principles, applied differently

Risk Assessments:

Input: Asset data (structured fields), threat intel (categorised), business context (defined attributes)

Process: Impact matrix (documented scoring) → Likelihood assessment (checklist) → Control evaluation (scoring rubric) → Rating calculation (formula)

Output: Risk record with fixed schema: rating, justification, controls, owner, review_date

Repeatability: Two analysts score within 1 point using same inputs

Policy Writing:

Input: Framework requirements (mapped controls), current controls (inventory), stakeholder needs (documented)

Process: Template selection (decision tree) → Requirement mapping (checklist) → Legal review (approval workflow)

Output: Policy document following standard template with consistent sections

Repeatability: Policies follow same structure, depth, and approval path

Evidence Collection:

Input: Control requirement (ID), data source (API endpoint), validation criteria (rules)

Process: API call → Data transformation (script) → Quality check (validation rules)

Output: Evidence record with metadata: timestamp, source, validation_status, artefact_link

Repeatability: Fully automated, runs identically every execution

Dashboard Creation:

Input: Data sources (endpoints), stakeholder questions (requirements), refresh cadence (schedule)

Process: Query definition (SQL) → Data aggregation (transformation) → Visualisation rules (config)

Output: Dashboard with defined metrics, thresholds, and refresh schedule

Repeatability: Updates automatically following same logic each time

Same principles. Different applications.
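To make one of these concrete, here is a rough sketch of the Evidence Collection workflow: API call, transformation, quality check, then an evidence record with the metadata fields listed above. Everything specific is an assumption for illustration: the `collect_evidence` function, the control ID, the URLs, the lowercase-keys transformation, and the MFA rule. The API call is passed in as a function so the example runs without a network.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass(frozen=True)
class EvidenceRecord:
    control_id: str
    timestamp: str          # ISO 8601, UTC
    source: str
    validation_status: str  # "pass" or "fail"
    artefact_link: str


def collect_evidence(
    control_id: str,
    source: str,
    fetch: Callable[[str], list[dict]],
    rules: list[Callable[[dict], bool]],
    artefact_link: str,
) -> EvidenceRecord:
    """API call -> transformation -> quality check -> evidence record."""
    raw = fetch(source)  # API call (injected)
    # Transformation: normalise keys to lowercase (illustrative only).
    rows = [{key.lower(): value for key, value in row.items()} for row in raw]
    # Quality check: at least one row, and every row satisfies every rule.
    passed = bool(rows) and all(rule(row) for row in rows for rule in rules)
    return EvidenceRecord(
        control_id=control_id,
        timestamp=datetime.now(timezone.utc).isoformat(),
        source=source,
        validation_status="pass" if passed else "fail",
        artefact_link=artefact_link,
    )


def fake_fetch(url: str) -> list[dict]:
    """Stand-in for a real API client; returns canned data."""
    return [{"User": "alice", "MFA": True}, {"User": "bob", "MFA": True}]


record = collect_evidence(
    control_id="AC-01",                           # hypothetical control ID
    source="https://idp.example.com/api/users",   # hypothetical endpoint
    fetch=fake_fetch,
    rules=[lambda row: row.get("mfa") is True],   # hypothetical validation rule
    artefact_link="s3://evidence/AC-01.json",     # hypothetical storage location
)
```

Because the inputs, the rules, and the record shape are all explicit, swapping `fake_fetch` for a real client changes where the data comes from without changing what "valid evidence" means.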

Next Week 📅

Next week, we build on top of this.

Validation Frameworks That Actually Work

You've engineered your process. Now you need to validate it.

Next week: validation frameworks that catch bad outputs before they reach stakeholders.

Simple rules to prepare for automation.


That’s all for this week’s issue, folks!

If you enjoyed it, you might also enjoy:

My spicier takes on LinkedIn [/in/ayoubfandi]

Listening to the GRC Engineer Podcast

See you next week!
