⚙️ The Zillow Effect in GRC: When Platforms Perform Control Testing

How GRC automation shifted from evidence storage to active control assessment, and what that means for your audit relationship and your GRC Engineering practice

Meet Olivia, a Director of Compliance at a Series B SaaS company.

Last quarter, she hired a reputable-ish audit firm for their SOC 2 audit. £80k for what she thought would be a comprehensive security assessment.

The auditor logged into their GRC automation platform account, clicked through the green checkmarks, asked three clarifying questions, and issued a clean report.

"Wait," Olivia asked, "aren't you going to test the controls?"

"We did," the auditor replied. "The tool already tested them. We validated the tool’s testing."

Olivia just paid £80k for someone to validate a platform's automated assessment. The auditor didn't bring methodology, testing approach, or independent judgement. They brought attestation of work the platform already did.

Welcome to the Zillow effect in GRC.

Why It Matters 🔍

The why: the core problem this solves and why you should care

When Zillow launched, estate agents lost control of property information. Buyers could see listings, market data, and comparable sales themselves. The information advantage that justified 6% commission disappeared. Agents shifted from information gatekeepers to transaction specialists, and fees dropped to 2-3%.

The same shift just happened in GRC, but the implications go deeper.

A decade ago, enterprise GRC meant £800k-1.2M for an Archer or ServiceNow implementation, plus £100-200k annually for Big 4 audit firms. Deloitte would send a team of six for five weeks. They'd design evidence collection methodology, build testing approaches from scratch, assess every control independently, and deliver comprehensive findings. The audit firm controlled everything, and you paid premium prices for that control.

Today the economics are inverted. Modern platforms cost £70-200k annually. Audit fees range from £10k for checkbox attestation to £80k for quality validation. But here's what changed beyond just pricing: the audit firm that used to send six people for five weeks now sends two people for two weeks. They're not doing less work because they got more efficient. They're doing less work because the platform already did it.

Total spend dropped from £1M+ to under £300k. Software captured the value that used to flow to audit firms. This enabled a wave of boutique audit firms to emerge. A 5-person firm can now compete with Big 4 because the platform handles operational heavy lifting. Lower overhead, faster turnaround, competitive pricing. New audit firms launched specifically to leverage platform economics.
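The spend figures can be sanity-checked with quick arithmetic. The sketch below uses only the ranges quoted in this section; adding the one-off legacy implementation cost to a single year of audit fees is a simplifying assumption made for illustration, and the variable names are invented.

```python
# Ranges quoted in the text, in GBP, as (low, high). Names are illustrative.
legacy_implementation = (800_000, 1_200_000)  # Archer / ServiceNow implementation
legacy_audit = (100_000, 200_000)             # Big 4 audit, per year
modern_platform = (70_000, 200_000)           # platform subscription, per year
modern_audit = (10_000, 80_000)               # checkbox attestation to quality validation


def add_ranges(a, b):
    """Add two (low, high) ranges element-wise."""
    return (a[0] + b[0], a[1] + b[1])


legacy_total = add_ranges(legacy_implementation, legacy_audit)
modern_total = add_ranges(modern_platform, modern_audit)

print(f"Legacy: £{legacy_total[0]:,} to £{legacy_total[1]:,}")
print(f"Modern: £{modern_total[0]:,} to £{modern_total[1]:,}")
```

Under that assumption the legacy range comes to £900k-1.4M and the modern range to £80k-280k, which is consistent with the "£1M+ to under £300k" shorthand.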

But unlike Zillow, which just made information accessible, GRC platforms went further: they started making compliance judgements.

This is where GRC Engineering becomes critical. The GRC Engineering manifesto advocates for embedding compliance into systems. Platforms executed that vision with vendor methodology instead of your methodology. You've embedded compliance (good) but outsourced the thinking to a SaaS vendor (problematic). Real GRC Engineering means understanding testing logic in your systems, not trusting someone else's logic.

Strategic Framework 🧩

The what: The conceptual approach broken down into 3 main principles

Principle 1: Platforms Went From Storage to Opinion Engines

Old platforms stored evidence. Archer and ServiceNow had no opinion about control effectiveness. The auditor brought methodology and formed the opinion that mattered.

Modern platforms are different. When an automation platform connects to AWS, pulls IAM config, and declares "MFA control: Operating effectively," it has just tested a control and formed an opinion. That used to be exclusively the auditor's job.

The platform isn't just collecting evidence. It's applying testing logic (often machine-readable, JSON-based) to determine control effectiveness. It's making compliance judgements that used to require human expertise and professional scepticism.

```json
{
  "old_platform": {
    "function": "Store evidence",
    "opinion": "None",
    "auditor_dependency": "100%",
    "value": "Organised storage"
  },
  "new_platform": {
    "function": "Collect + test + form opinions",
    "opinion": "Effective/ineffective per platform logic",
    "auditor_dependency": "30%",
    "value": "Continuous assessment + methodology",
    "risk": "If platform logic is wrong, everyone trusts it 💀"
  },
  "reality_check": {
    "before": "Auditor was your compliance brain",
    "after": "Platform is your compliance brain",
    "auditor_role": "Validates the brain (hopefully)",
    "question": "Who validates the validator? 🤔"
  }
}
```

This is what happens when your compliance brain becomes software. And software doesn't ask uncomfortable questions unless you programme it to.

The platform shift from passive to active enabled everything else: the pricing changes, the boutique audit firms, and the quality problems we're about to explore. Once platforms started forming opinions, the entire audit industry restructured around that new reality.
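To make the "opinion engine" idea concrete, here is a minimal sketch of machine-readable testing logic. The rule schema, field names, and sample configuration are hypothetical and do not represent any vendor's actual format. The point is only that whoever writes the rule decides what "operating effectively" means.

```python
import json

# Hypothetical rule in a made-up schema, not a real platform's format.
RULE = json.loads("""
{
  "control": "MFA",
  "checks": [
    {"field": "mfa_configured", "equals": true}
  ]
}
""")


def evaluate(rule, config):
    """Return a platform-style opinion: every check in the rule must match."""
    passed = all(config.get(c["field"]) == c["equals"] for c in rule["checks"])
    return "Operating effectively" if passed else "Ineffective"


# Assumed environment: MFA is configured but not enforced for admins.
config = {"mfa_configured": True, "mfa_enforced_for_admins": False}

print(evaluate(RULE, config))

# The same environment judged by a stricter (still hypothetical) rule.
STRICT_RULE = {
    "control": "MFA",
    "checks": RULE["checks"] + [{"field": "mfa_enforced_for_admins", "equals": True}],
}
print(evaluate(STRICT_RULE, config))
```

The first rule never looks at `mfa_enforced_for_admins`, so it reports the control as effective; the stricter rule reports it as ineffective for the same environment. Nothing about the environment changed, only the logic.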

Principle 2: Information Parity Shifted Power to Practitioners

Before platforms, auditors controlled compliance information. You didn't know if controls were effective until they tested and told you. You couldn't see how your programme compared to peers. You had no visibility into evidence quality before the audit. Information asymmetry gave auditors pricing power.

After platforms, everything changed. Your Vanta dashboard shows you control effectiveness in real-time: which controls are passing, which are failing, and exactly why. You see benchmark data comparing your implementation to similar companies. You understand evidence gaps weeks before the audit. You can track control drift and get alerts when effectiveness degrades.

The auditor arrives to validate information you already have, not to discover information you don't. That's fundamentally different from the old model where the audit was when you learned about your compliance posture.

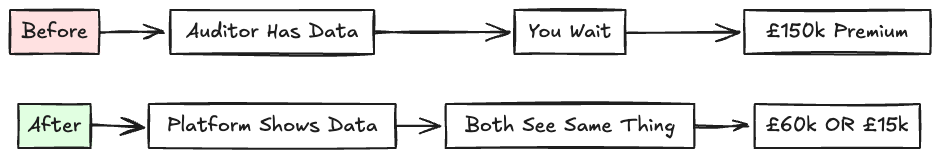

Your platform shows you what auditors will see during fieldwork. The discovery phase that used to take 40+ hours is now 4 hours of platform review. Auditors went from building assessments from scratch to validating pre-built assessments. That's why fees dropped 60-90%.

This connects to the central data layer: when compliance data is continuously assessed, external validation gets cheaper because you're not paying someone to discover what you already know.

Principle 3: Platform Opinions Enabled Checkbox Audits

Because platforms form opinions on control effectiveness, a new business model became viable: provide attestation without independent testing.

This couldn't exist before. When auditors had to design tests, collect evidence, and form opinions from scratch, you couldn't deliver a credible SOC 2 audit for £15k. The work required 200+ hours.

The checkbox audit playbook: log into platform, review green checkmarks, ask questions, sign report. Platform did 90% of work. Auditor provided 10% validation and 100% of signature.

| Auditor Behaviour | Fee | What You Get | Risk |
|---|---|---|---|
| Questions platform, tests independently | £60-80k | Real validation | Low |
| Reviews outputs, basic validation | £30-40k | Platform trust | Medium |
| Checks green marks, signs | £10-15k | Signature only | High |

This is the GRC Engineering paradox: platforms automated best practices (continuous testing, machine-readable controls), but can't automate critical thinking about whether tests prove what they claim. That requires humans who understand platform logic AND organisational risks.

Execution Blueprint 🛠️

The how: 3 practical steps to put this strategy into action at your organisation

Step 1: Audit Your Platform's Testing Logic

If your platform forms opinions on control effectiveness, you need to understand the logic behind those opinions. Most GRC leaders never look under the hood.

Request detailed documentation from your platform vendor:

- How exactly do you test MFA control effectiveness? Not just "we verify MFA is enabled" but show me the actual checks: Do you verify it's enforced for all users? Do you check for bypass mechanisms? Do you validate backup authentication methods? What about service accounts?
- What configuration does your automated testing actually check vs what it assumes exists?
- What passing criteria do you apply and why those specific thresholds?

For critical controls, map the gaps. Take your MFA control as an example. Your platform might check that MFA is configured in your identity provider. But does it verify MFA is actually enforced at application level? Does it catch when developers bypass MFA for "temporary" API access that becomes permanent? Does it identify service accounts that don't support MFA but have privileged access?

Real scenario: A company's platform showed "MFA control: Operating effectively" for 18 months. Their auditor (quality firm, not checkbox) asked to see the enforcement policy. Turned out MFA was configured but not enforced for administrator accounts because it "broke some legacy admin tools." Platform never tested enforcement, only configuration. The gap existed in plain sight.
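The difference between testing configuration and testing enforcement can be sketched in a few lines. The `Account` record, the account names, and both check functions are invented for illustration; a real test would pull this data from your identity provider and applications.

```python
from dataclasses import dataclass


@dataclass
class Account:
    name: str
    is_admin: bool
    is_service: bool
    mfa_enforced: bool


def configuration_check(idp_mfa_configured: bool) -> bool:
    """What a configuration-only test sees: one tenant-level flag."""
    return idp_mfa_configured


def enforcement_gaps(accounts):
    """List privileged accounts (human or service) where MFA is not enforced."""
    return [
        (a.name, "service" if a.is_service else "human")
        for a in accounts
        if a.is_admin and not a.mfa_enforced
    ]


# Hypothetical sample data.
accounts = [
    Account("alice", is_admin=False, is_service=False, mfa_enforced=True),
    Account("legacy-admin", is_admin=True, is_service=False, mfa_enforced=False),
    Account("ci-deployer", is_admin=True, is_service=True, mfa_enforced=False),
]

print(configuration_check(True))   # the tenant-level flag alone
print(enforcement_gaps(accounts))  # accounts the flag says nothing about
```

Here the configuration check passes while two privileged accounts sit outside enforcement, which is the shape of the gap in the scenario above.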

Output: Documented list of controls where platform testing is insufficient for your specific environment. This becomes your scope for deeper validation.

Connects to designing controls where compliance is an afterthought: design for your threats, not just for passing platform tests.

Step 2: Configure Platform for Independent Validation

Most platforms are designed for you to see compliance status, not for auditors to test independently. This creates friction when auditors want to validate methodology instead of just reviewing outputs.

During platform setup or renewal, configure for audit independence:

- Enable auditor data extraction (API access, raw exports, underlying evidence retrieval)
- Verify auditors can pull data for their analysis tools
- Ensure platform doesn't lock auditors into platform-only assessment
- Test auditor access flow before actual audit cycle

Output: Platform configuration enabling real audits, not just output validation. Your auditor should work with your platform, not be constrained by it.
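One way to test the auditor access flow is to recompute a control result from a raw export and compare it with what the dashboard reports. The CSV columns, sample rows, and `dashboard_status` value below are all invented for illustration; real exports differ by platform.

```python
import csv
import io

# Hypothetical raw export; the column names are assumptions.
RAW_EXPORT = """user,role,mfa_enforced
alice,engineer,true
bob,admin,false
carol,admin,true
"""

dashboard_status = "pass"  # what the platform UI reports (assumed)


def recompute_status(raw_csv: str) -> str:
    """Independent re-test: fail if any admin lacks enforced MFA."""
    rows = csv.DictReader(io.StringIO(raw_csv))
    failures = [
        row["user"]
        for row in rows
        if row["role"] == "admin" and row["mfa_enforced"] != "true"
    ]
    return "fail" if failures else "pass"


independent_status = recompute_status(RAW_EXPORT)
if independent_status != dashboard_status:
    print(f"Mismatch: dashboard={dashboard_status}, recomputed={independent_status}")
```

If the platform cannot produce an export that supports this kind of recomputation, that is worth knowing before fieldwork starts.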

Step 3: Question Platform Methodology With Your Auditor

The critical conversation happens between you, your platform, and your auditor about testing methodology. This doesn't happen automatically. You orchestrate it.

Before audit fieldwork:

- Share platform testing logic documentation with auditor
- Ask: "Which controls need independent testing beyond platform review?"
- Build validation plan for platform blind spots

During planning:

- Schedule time for methodology questioning
- Enable access to underlying systems for spot-checking
- Discuss which platform assumptions need validation in your environment

Question for auditor: "Which platform control tests will you independently verify vs validate based on methodology alone?"

Their answer reveals whether they're thinking critically about platform logic or just accepting it. For ex-auditors leading GRC: you remember when your firm's methodology was standard. Now ensure your auditor questions platform methodology with the same rigour you once applied.

Output: Audit plan identifying where platform testing suffices vs where independent validation is required.
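As a rough sketch, the audit plan can start as a simple mapping from each control to a validation approach. The control list, the two flags, and the decision rule below are assumptions for illustration; your own criteria will depend on your risks and on what Step 1 uncovered.

```python
# Hypothetical control inventory; ids, fields, and the rule are assumptions.
controls = [
    {"id": "MFA", "critical": True, "known_platform_gap": True},
    {"id": "Backups", "critical": True, "known_platform_gap": False},
    {"id": "Security training", "critical": False, "known_platform_gap": False},
]


def plan(control):
    """Assign a validation approach to one control."""
    if control["known_platform_gap"]:
        return "independent testing"
    if control["critical"]:
        return "platform review + spot-check"
    return "platform review"


audit_plan = {c["id"]: plan(c) for c in controls}
for control_id, approach in audit_plan.items():
    print(f"{control_id}: {approach}")
```

The value is less in the code than in forcing an explicit decision per control, which you can then put in front of your auditor.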

Connects to control orchestration: platform and auditor as integrated compliance system.

```python
# Platform_evaluation.py
# Copy these questions for your next vendor call


class PlatformEvaluation:
    def __init__(self):
        self.red_flags = []

    def test_methodology_questions(self):
        """The questions that reveal if platform actually knows what it's testing"""
        return [
            "How do you test [specific control]? Show me actual logic, not marketing.",
            "What edge cases in our environment does your testing miss?",
            "What happens when automated testing fails or returns inconclusive?",
        ]

    def auditor_compatibility_check(self):
        """Can auditors actually validate or just trust your dashboard?"""
        return [
            "Can auditors extract underlying evidence for independent analysis?",
            "Does your platform support auditor methodology or force them into yours?",
        ]

    def detect_red_flags(self, vendor_response,
                         defensive_about_auditor_access=False,
                         no_real_failure_examples=False):
        """Run these checks during the call.

        The two boolean flags are your own judgement calls from the conversation.
        """
        if "industry best practices" in vendor_response:
            self.red_flags.append("Vague answer - they don't know their own logic")
        if defensive_about_auditor_access:
            self.red_flags.append("Hiding something or poorly designed")
        if no_real_failure_examples:
            self.red_flags.append("Platform might not actually catch issues")
        return len(self.red_flags) == 0  # True = proceed, False = run away


# If vendor fails this evaluation, no amount of features or integrations will fix it
```

The Bottom Line

Zillow created information parity. GRC platforms went further: they started forming opinions on control effectiveness.

That shift created new economics, new market structure, and new risks (checkbox audits providing attestation without assurance).

For ex-auditors leading GRC: You remember when your firm controlled methodology. That world ended. The platform controls methodology now. Your job is ensuring the platform's methodology is rigorous and that auditors validate it instead of trusting it.

The power shifted to platforms. Navigate deliberately. Audit platform testing logic, configure for independent validation, question methodology with auditors.

Attestation without validation is expensive theatre. You deserve better.

That’s all for this week’s issue, folks!

If you enjoyed it, you might also enjoy:

My spicier takes on LinkedIn [/in/ayoubfandi]

Listening to the GRC Engineer Podcast

See you next week!
